Ball catching delta robot in LEGO


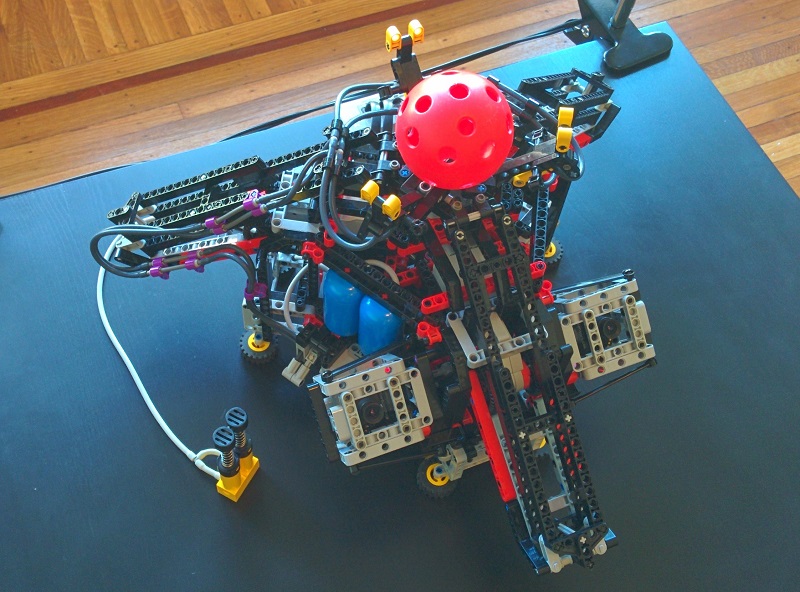

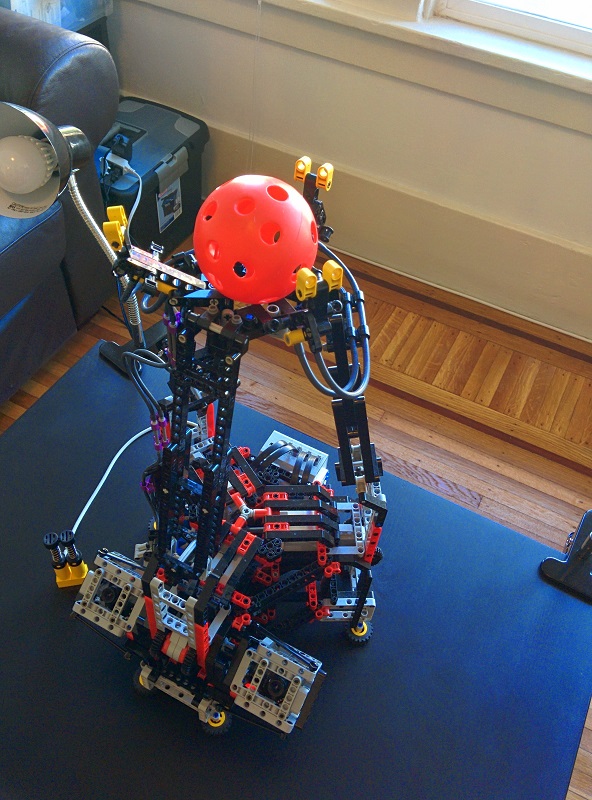

This is a delta robot built with LEGO Mindstorms to catch a ball thrown through the air. The robot is programmed using ev3dev, an alternative operating system for the EV3 brick. It uses two NXTcam cameras to track the ball and estimate its trajectory in 3D.

Here is a video of the robot in action.

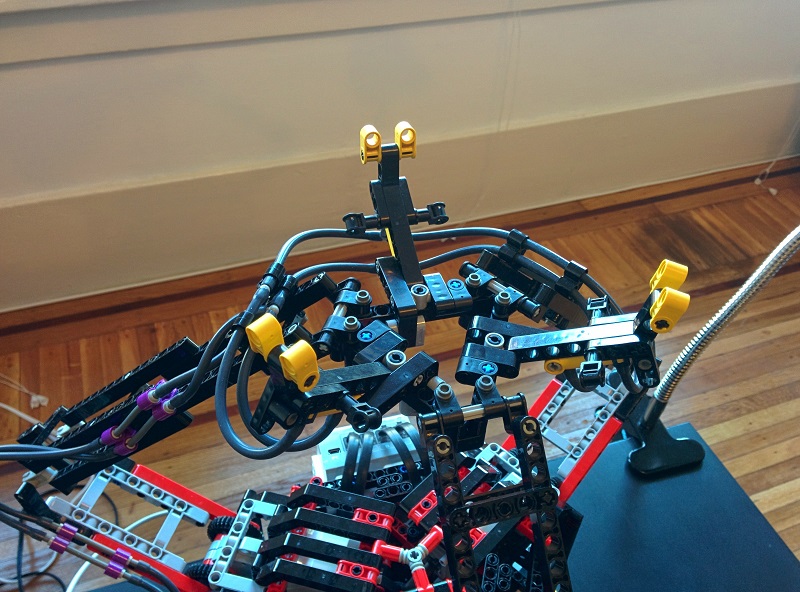

Lightweight pneumatic hand mechanism for catching the ball


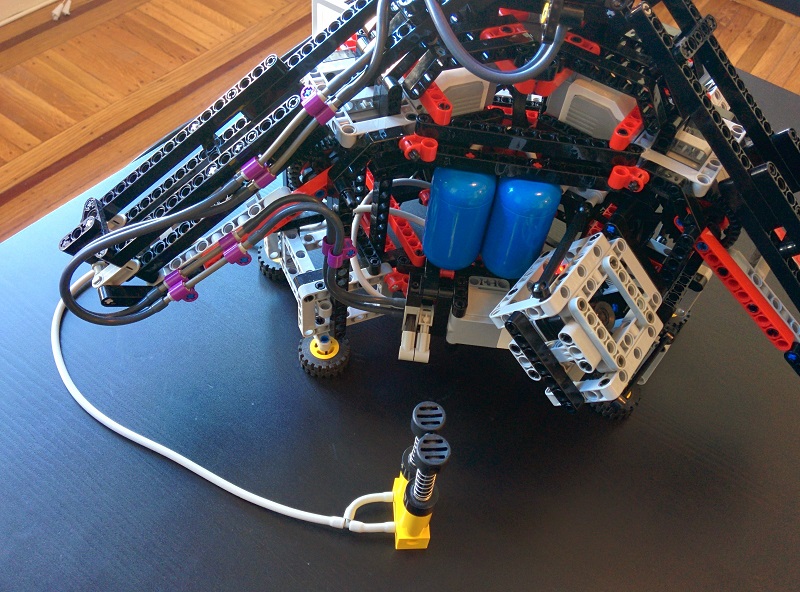

To catch a ball, a robot needs to move very quickly and accurately. This is why I chose a delta robot design and (over-)built it to be very stiff. It is also why I used pneumatics to actuate the robot's hand: the pneumatic parts on the hand are very light and move quickly. All of the heavier parts that control the hand are located on the base of the robot, where mass is less of a concern. The robot has a horizontal range of about 50 LEGO studs (about 40 cm), and it can reach about 40 studs (about 32 cm) above the top of the platform.
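For readers unfamiliar with delta robots, the sketch below shows how a desired hand position is converted into motor angles for a rotary delta. It assumes a common textbook layout (three motors spaced 120° apart, each driving an upper arm whose forearm connects to a small hand platform) and uses made-up dimensions in studs. The post does not give the robot's geometry or describe how its software solves the kinematics, so `arm_angle`, `inverse_kinematics`, and all constants are hypothetical.

```python
import math

# Hypothetical geometry in LEGO studs (1 stud = 8 mm). The real robot's
# dimensions are not given; these values only make the example concrete.
BASE_RADIUS = 10.0      # robot axis to each motor's pivot axis
EFFECTOR_RADIUS = 3.0   # center of the hand platform to each forearm joint
UPPER_ARM = 20.0        # motor pivot to elbow
FOREARM = 35.0          # elbow to hand platform
ARM_AZIMUTHS = (0.0, 2.0 * math.pi / 3.0, 4.0 * math.pi / 3.0)


def arm_angle(x, y, z, phi):
    """Motor angle (radians, up from horizontal) for the arm at azimuth phi.

    z is measured upward from the plane of the motor pivots. Returns None if
    this arm cannot reach the hand position (x, y, z).
    """
    # Rotate the target by -phi so this arm swings in the x-z plane.
    xr = x * math.cos(phi) + y * math.sin(phi)
    yr = -x * math.sin(phi) + y * math.cos(phi)
    # Forearm joint on the hand platform, relative to the motor pivot.
    dx = xr + EFFECTOR_RADIUS - BASE_RADIUS
    # Elbow = pivot + UPPER_ARM * (cos t, 0, sin t). Requiring the elbow to be
    # exactly FOREARM away from the platform joint reduces to
    # dx*cos(t) + z*sin(t) = k.
    k = (UPPER_ARM**2 + dx**2 + yr**2 + z**2 - FOREARM**2) / (2.0 * UPPER_ARM)
    rho = math.hypot(dx, z)
    if rho == 0.0 or abs(k) > rho:
        return None
    # dx*cos(t) + z*sin(t) = rho*cos(t - alpha), so t = alpha +/- acos(k/rho).
    # The minus branch puts the elbow outboard for targets above and inside
    # the pivot (assumed to match the build).
    alpha = math.atan2(z, dx)
    return alpha - math.acos(k / rho)


def inverse_kinematics(x, y, z):
    """Three motor angles placing the hand at (x, y, z), or None if unreachable."""
    angles = tuple(arm_angle(x, y, z, phi) for phi in ARM_AZIMUTHS)
    return None if any(a is None for a in angles) else angles
```

For example, `inverse_kinematics(0.0, 0.0, 30.0)` returns three equal angles, because a target on the robot's axis looks the same to every arm, while a target beyond the forearm's reach returns `None`.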

Valve and motor for controlling the hand


Most of the hard problems in getting this to work were in the software: tracking the ball and planning the motions needed to execute a catch.

The biggest challenge was camera calibration. Calibration is the process of determining the camera parameters that define how positions in the world map to pixel coordinates on the camera's sensor. I use the pinhole camera model with a very simple radial distortion model.
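As a concrete reference for the camera model, here is a minimal sketch of pinhole projection with a one-coefficient radial distortion term: transform a world point into the camera frame, divide by depth, and scale the normalized coordinates by 1 + k1·r². Reading "a very simple radial distortion model" as a single coefficient `k1`, along with the `PinholeCamera` class and its field names, is my assumption for illustration.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    """Pinhole camera with one radial distortion coefficient (illustrative)."""
    f: float    # focal length, in pixels
    cx: float   # principal point x, in pixels
    cy: float   # principal point y, in pixels
    k1: float   # radial distortion coefficient
    R: tuple    # 3x3 world-to-camera rotation, given as rows
    t: tuple    # world-to-camera translation

    def project(self, p):
        """Map a 3D world point p to pixel coordinates (u, v)."""
        # World -> camera coordinates: pc = R p + t.
        pc = [sum(self.R[i][j] * p[j] for j in range(3)) + self.t[i]
              for i in range(3)]
        if pc[2] <= 0:
            raise ValueError("point is behind the camera")
        # Perspective division onto the normalized image plane.
        x, y = pc[0] / pc[2], pc[1] / pc[2]
        # Radial distortion: scale by (1 + k1 * r^2).
        scale = 1.0 + self.k1 * (x * x + y * y)
        return self.f * x * scale + self.cx, self.f * y * scale + self.cy
```

Calibrating a camera then means finding `f`, `cx`, `cy`, `k1`, `R`, and `t` such that `project` agrees with the coordinates the camera reports for objects at known positions.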

Calibrating NXTcam (or similar object-detecting cameras) is very difficult because this type of camera does not provide images, only object tracking data. This means that standard calibration tools and packages that rely on features at known relative positions (e.g. OpenCV) cannot easily be used. In addition, NXTcam has very low resolution. Ideally, camera calibration would use subpixel-accurate feature extraction, especially for a device with resolution as low as NXTcam's.

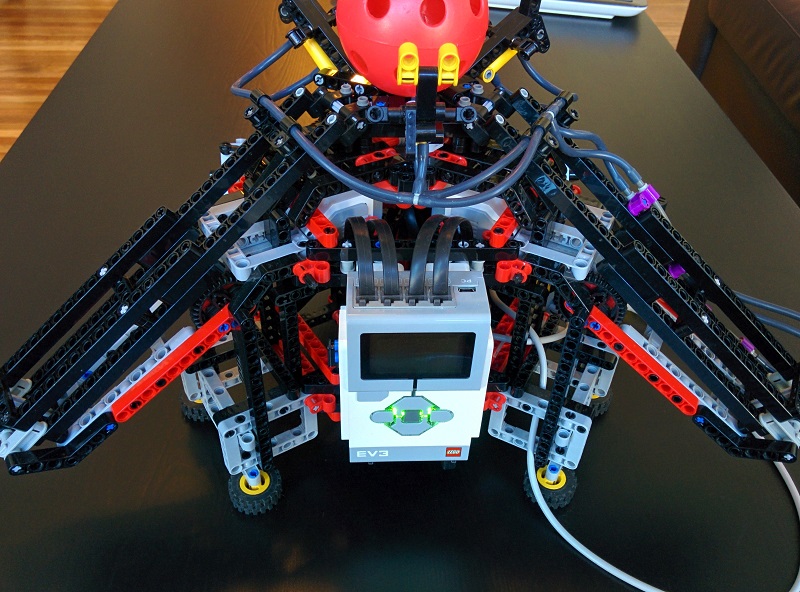

Back of the delta robot


My solution to the calibration problem is to use a different constraint. I track a ball tied to one end of a string whose other end is fixed at a known position relative to the robot. Moving the ball around the stereo field of view while keeping the string taut gives tracking observations of an object known to lie on a sphere. Calibration is then formulated as an optimization problem: find the camera parameters that minimize the distance from the surface of the sphere to the estimated 3D position of the ball. This calibration procedure is finicky, and it took me several attempts to get a good calibration. It helps to use observations from several different spheres; the more, the better. It's also important to use inelastic string, such as kite string.
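The sphere constraint can be written as a residual function. This sketch back-projects each camera's observation to a ray (undoing the radial distortion by fixed-point iteration), triangulates the ball as the midpoint of the closest points on the two rays, and returns the distance from that point to the sphere with known center and radius. The class and function names, the midpoint triangulation, and the assumption that each observation pair is effectively simultaneous (plausible when the ball is moved slowly) are mine, not details from the post.

```python
import math
from dataclasses import dataclass


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def transpose_mul(R, w):
    """Compute R^T w for a 3x3 matrix R given as rows."""
    return tuple(sum(R[j][i] * w[j] for j in range(3)) for i in range(3))


@dataclass
class Camera:
    f: float
    cx: float
    cy: float
    k1: float   # radial distortion coefficient
    R: tuple    # 3x3 world-to-camera rotation (rows)
    t: tuple    # world-to-camera translation

    def project(self, p):
        """World point -> distorted pixel coordinates."""
        pc = [dot(self.R[i], p) + self.t[i] for i in range(3)]
        x, y = pc[0] / pc[2], pc[1] / pc[2]
        s = 1.0 + self.k1 * (x * x + y * y)
        return self.f * x * s + self.cx, self.f * y * s + self.cy

    def ray(self, u, v):
        """Pixel -> world-space ray (origin, direction)."""
        xd, yd = (u - self.cx) / self.f, (v - self.cy) / self.f
        x, y = xd, yd
        for _ in range(20):  # solve xd = x * (1 + k1 r^2) by fixed-point iteration
            s = 1.0 + self.k1 * (x * x + y * y)
            x, y = xd / s, yd / s
        origin = tuple(-c for c in transpose_mul(self.R, self.t))  # camera center
        return origin, transpose_mul(self.R, (x, y, 1.0))


def triangulate(ray_a, ray_b):
    """Midpoint of the shortest segment between two (generally skew) rays."""
    (p, d), (q, e) = ray_a, ray_b
    w = sub(p, q)
    a, b, c = dot(d, d), dot(d, e), dot(e, e)
    wd, we = dot(d, w), dot(e, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel")
    s = (b * we - c * wd) / denom   # parameter of closest point on ray a
    r = (a * we - b * wd) / denom   # parameter of closest point on ray b
    return tuple(0.5 * (pi + s * di + qi + r * ei)
                 for pi, di, qi, ei in zip(p, d, q, e))


def sphere_residuals(cam_a, cam_b, pairs, center, radius):
    """Signed distance from each triangulated ball position to the sphere.

    pairs: ((u_a, v_a), (u_b, v_b)) observations assumed to be simultaneous.
    """
    return [math.dist(triangulate(cam_a.ray(ua, va), cam_b.ray(ub, vb)), center)
            - radius
            for (ua, va), (ub, vb) in pairs]
```

A full calibration would concatenate these residuals over every recorded sphere (each with its own known center and string length) and minimize their sum of squares over both cameras' parameters using a nonlinear least-squares method such as Levenberg-Marquardt, parameterizing each `R` with three angles so it remains a rotation.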

Delta robot extended to its maximum reach position


Once the cameras are calibrated, the next problem is estimating the trajectory of a ball flying through the air. This is difficult because the NXTcam frames are not synchronized, so we cannot assume that the objects detected by the two NXTcams in a pair of frames are at the same 3D position. As a result, the most obvious approach, computing the 3D position of the ball from each pair of camera observations and fitting a trajectory to these positions, is not viable.
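A rough, illustrative calculation shows why the offset matters. Assuming a throw speed of about 5 m/s and a camera rate of about 30 frames per second (neither figure comes from the post), an observation paired with the most recent observation from the other camera can be up to one frame period old, during which the ball travels

$$
\Delta t \le \frac{1}{30}\,\mathrm{s} \approx 33\,\mathrm{ms},
\qquad
\Delta x = v\,\Delta t \le 5\,\mathrm{m/s} \times \frac{1}{30}\,\mathrm{s} \approx 0.17\,\mathrm{m}.
$$

An error of that size is large next to the robot's horizontal range of about 40 cm.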

To work around this, I set up trajectory estimation as an optimization problem whose objective function is the reprojection error between the camera observations and the current trajectory estimate, sampled at the observation times. This formulation makes it possible to add a variable representing the unknown time shift between the two cameras' sets of observations. See "estimating the 3D trajectory of a flying object from 2D observations" for more information. Despite lacking floating-point hardware, the EV3 processor can solve this nonlinear optimization problem in 40-80 ms (depending on the quality of the initialization), which leaves the robot enough time to move to where the trajectory meets its reachable area.
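Here is a minimal sketch of such an objective. It assumes a drag-free ballistic model, a z-up world frame in meters and seconds, and undistorted 3×4 camera matrices for brevity. The unknowns are the ball's position and velocity at time zero plus the clock offset of camera B, and each observation contributes its pixel error. The function names and the seven-element parameter layout are hypothetical; the post's own model and solver may differ.

```python
# Assumed conventions (not from the post): world z axis points up, units are
# meters and seconds, and air drag is neglected.
GRAVITY = (0.0, 0.0, -9.81)


def position(params, t):
    """Ballistic position at time t. params = (x0, y0, z0, vx, vy, vz, time_shift)."""
    return tuple(params[i] + params[i + 3] * t + 0.5 * GRAVITY[i] * t * t
                 for i in range(3))


def project(P, X):
    """Pinhole projection with a 3x4 camera matrix P (lens distortion omitted)."""
    h = [sum(P[r][c] * X[c] for c in range(3)) + P[r][3] for r in range(3)]
    return h[0] / h[2], h[1] / h[2]


def reprojection_residuals(params, P_a, obs_a, P_b, obs_b):
    """Pixel errors between the trajectory and both cameras' observations.

    obs_a, obs_b: lists of (timestamp, u, v). Camera B's timestamps are
    shifted by the unknown offset params[6] before sampling the trajectory.
    """
    residuals = []
    for P, observations, offset in ((P_a, obs_a, 0.0), (P_b, obs_b, params[6])):
        for t, u, v in observations:
            pu, pv = project(P, position(params, t + offset))
            residuals.extend((pu - u, pv - v))
    return residuals


def cost(params, P_a, obs_a, P_b, obs_b):
    """Sum of squared reprojection errors: the quantity an optimizer minimizes."""
    return sum(r * r for r in reprojection_residuals(params, P_a, obs_a, P_b, obs_b))
```

Note that observations never have to be paired across cameras; each one is compared with the trajectory at its own (shifted) timestamp. Any nonlinear least-squares solver, for example Gauss-Newton started from a rough initial guess, could then minimize `cost` over all seven parameters.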

The robot is programmed to start moving toward where the trajectory is expected to intersect its reachable region as soon as it has the first trajectory estimate. As more camera observations arrive, the trajectory estimate improves, and the robot keeps moving toward the expected intersection of the refined trajectory with the reachable region. To widen the window of time for catching the ball, the robot attempts to match the ball's trajectory while closing the "hand" as the ball arrives. This reduces the risk of the ball bouncing out of the hand during the catch.
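Finding where the ball becomes reachable reduces to solving the ballistic equation for time. This sketch makes a strong simplifying assumption: the reachable region is approximated by a horizontal disk of radius `reach_radius` at height `z_catch`, centered on the robot's vertical axis at the origin (a real delta workspace is a more complicated 3D volume). It returns the time of the descending crossing, the catch point, and the ball's velocity there, which a hand trying to match the trajectory could move along. `catch_point` and its parameters are hypothetical.

```python
import math

GRAVITY = 9.81  # m/s^2; assumes a z-up world frame in meters


def catch_point(p0, v0, z_catch, reach_radius, t_now=0.0):
    """Where the ball crosses z = z_catch on the way down, if within reach.

    Trajectory: p(t) = p0 + v0*t - 0.5*GRAVITY*t^2 * z_hat (drag ignored).
    Returns (t, (x, y, z_catch), velocity) or None.
    """
    x0, y0, z0 = p0
    vx, vy, vz = v0
    # z0 + vz*t - 0.5*g*t^2 = z_catch  =>  t = (vz +/- sqrt(vz^2 + 2g(z0 - z_catch))) / g
    disc = vz * vz + 2.0 * GRAVITY * (z0 - z_catch)
    if disc < 0.0:
        return None  # the ball never gets this high
    t = (vz + math.sqrt(disc)) / GRAVITY  # later root: the descending crossing
    if t < t_now:
        return None  # the crossing is already in the past
    x, y = x0 + vx * t, y0 + vy * t
    if math.hypot(x, y) > reach_radius:
        return None  # crossing lies outside the (approximate) workspace
    velocity = (vx, vy, vz - GRAVITY * t)
    return t, (x, y, z_catch), velocity
```

Because it is cheap, this can simply be re-run each time the trajectory estimate is refined, retargeting the robot to the new point.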

Putting it all together, the robot catches roughly 1 out of 5 throws that it should be able to catch (those within its range). I wasn't sure it would even be remotely possible to catch a ball with a LEGO robot, so while 1 out of 5 doesn't sound amazing, I'm happy with it!

The code to run this robot is available on GitHub.